Introduction

Finding Linux servers heavily reliant on Sudo rules for daily management tasks is a common occurrence. While not necessarily bad, Sudo rules can quickly become security’s worst nightmare. Before discussing the security implications, let’s first discuss what Sudo is.

Defining Sudo

What is Sudo? Sudo, which stands for “superuser do!,” is a program that allows a user to execute commands as another user, most commonly root, the Linux equivalent of “administrator”; think “runas” on Windows. Sudo enables the least privilege model, in which users are given rights to run only the elevated commands necessary to complete their job. Without Sudo, we would need to log into the server or desktop as root to perform an update, change network settings, remove users, or perform any other privileged task. Nothing bad could come from that, right?

A Brief Education on Sudo

This post isn’t about Sudo being a bad program, but about how commonly Sudo is misconfigured. Some Linux shops use LDAP to centralize the Sudo rules for a machine, but the file /etc/sudoers is the standard method of management. This file may look intimidating, but for the sake of this post, we are only going to talk about how users are assigned rules.

#

# This file MUST be edited with the ‘visudo’ command as root.

#

# Please consider adding local content in /etc/sudoers.d/ instead of

# directly modifying this file.

#

# See the man page for details on how to write a sudoers file.

#

Defaults env_reset

Defaults mail_badpass

Defaults secure_path="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

# Host alias specification

# User alias specification

# Cmnd alias specification

# User privilege specification

root ALL=(ALL:ALL) ALL

paragonsec ALL=(ALL:ALL) /bin/cat

# Allow members of group sudo to execute any command

%sudo ALL=(ALL:ALL) ALL

# See sudoers(5) for more information on “#include” directives:

#includedir /etc/sudoers.d

/etc/sudoers file example

The user privilege lines in the above example are what will be discussed in this post. These control which users can run Sudo and what programs they can run. Multiple entries can exist per user to facilitate running different programs under different user contexts depending on requirements. The format of a basic user Sudo rule is the following:

<user> <host>=(<user run as>:<group run as>) <command>

In the above /etc/sudoers file we see the user “paragonsec” has the rule “ALL=(ALL:ALL) /bin/cat”. This grants the user “paragonsec” the ability to execute /bin/cat with Sudo on any host, as any user, as any group. While this might not seem like a terrible thing, we can use /bin/cat’s functionality to print out any file on the system as root. Maybe something like /etc/shadow.
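To make the rule format concrete, here is a minimal Python sketch that splits a basic rule into its fields. It is an illustration only: the names `SudoRule`, `RULE_RE`, and `parse_rule` are hypothetical, and the pattern covers just the simple form shown above, not aliases, tags such as NOPASSWD, or multiple commands per line.

```python
import re
from typing import NamedTuple, Optional


class SudoRule(NamedTuple):
    """One basic rule: <user> <host>=(<run as user>:<run as group>) <command>."""
    user: str
    host: str
    run_as_user: str
    run_as_group: Optional[str]
    command: str


# Hypothetical pattern for the basic form only; real sudoers syntax is richer.
RULE_RE = re.compile(
    r"^(?P<user>\S+)\s+(?P<host>[^\s=]+)\s*=\s*"
    r"\((?P<run_as_user>[^:)\s]+)\s*(?::\s*(?P<run_as_group>[^)\s]+))?\s*\)\s*"
    r"(?P<command>.+)$"
)


def parse_rule(line: str) -> Optional[SudoRule]:
    """Return the parsed rule, or None for comments, Defaults, and other lines."""
    line = line.strip()
    if not line or line.startswith("#") or line.startswith("Defaults"):
        return None
    match = RULE_RE.match(line)
    if match is None:
        return None
    # The named groups line up one-to-one with the SudoRule fields.
    return SudoRule(**match.groupdict())


if __name__ == "__main__":
    print(parse_rule("paragonsec ALL=(ALL:ALL) /bin/cat"))
```

Run against the example file, this would pick out the root, paragonsec, and %sudo entries and skip the comments and Defaults lines.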

The Problem with Sudo

With basic knowledge of Sudo and /etc/sudoers, the hunt for vulnerabilities is on. During engagements, I consistently see two scenarios when it comes to Sudo rules.

- A complete lack of configuration where the Sudo rules don’t follow the least privilege model and allow many users to execute commands as root.

- A relatively secure set of Sudo rules, but the assigned command has a rarely used argument that allows the execution of shell commands.

Either way, Sudo has the potential to provide many opportunities to elevate your privileges in Linux, and often abusing Sudo rules is the quickest way to gain root privileges.
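The two scenarios can be told apart with a very small check on a rule’s command field. The sketch below is a rough illustration under stated assumptions: `SHELL_ESCAPE_OPTIONS` and `classify_command` are hypothetical names, and the three binaries listed are common examples of commands with an option that runs other programs, not a list taken from any particular tool.

```python
# Illustrative examples only (an assumption, not an exhaustive or official list):
# each of these binaries has an option that can launch another program.
SHELL_ESCAPE_OPTIONS = {
    "find": "-exec",
    "tar": "--checkpoint-action",
    "rsync": "-e",
}


def classify_command(command: str) -> str:
    """Sort a rule's command field into one of four rough buckets."""
    command = command.strip()
    if not command:
        raise ValueError("empty command field")
    if command == "ALL":
        # Scenario 1: no least privilege at all.
        return "unrestricted"
    parts = command.split()
    if len(parts) > 1:
        # Arguments are pinned in the rule; this sketch does not analyze them.
        return "pinned-arguments"
    binary = parts[0].rsplit("/", 1)[-1]
    if binary in SHELL_ESCAPE_OPTIONS:
        # Scenario 2: a bare path lets the user supply any argument,
        # including the one that runs other commands.
        return "shell-escape"
    return "review"


if __name__ == "__main__":
    for cmd in ("ALL", "/usr/bin/find", "/bin/cat"):
        print(cmd, "->", classify_command(cmd))
```

Note that /bin/cat lands in the “review” bucket here: it has no shell escape, yet it can still read any file as root, so a bucket like this is a starting point for manual review rather than a verdict.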

One might quickly point a finger at the Linux administrators for this oversight, but they aren’t the only culprits. Time and time again I come across routers, switches, cloud servers, and more that come with weak Sudo rules. The usual argument for this is “the user is an admin and should have the ability to execute commands as root.” If that’s the case, let’s just say “Root for everyone!”

This isn’t a reasonable option, so we need a way to secure Sudo. The best way to secure anything is to know how it can be abused by testing it, and for this, we now have Fall of Sudo.

Fall of Sudo

During an engagement leveraging these rules I thought, “how could I keep a record of all the insane Sudo privilege escalation wizardry I have seen people use?” The solution: “Fall of Sudo”, a script compiling a collection of Sudo rule privilege escalations I have either found by reading man pages or picked up from Linux gurus.

The purpose of this tool is to teach red teamers and blue teamers various ways to leverage Sudo rules on commands ranging from bash to rsync to gain root!

Currently, two features exist:

- “information” feature – This queries your current user’s Sudo rules and displays rules that could be leveraged to gain root. Upon selection, the rule will be presented with an l33t printout showing which commands to execute in order to exploit the rule.

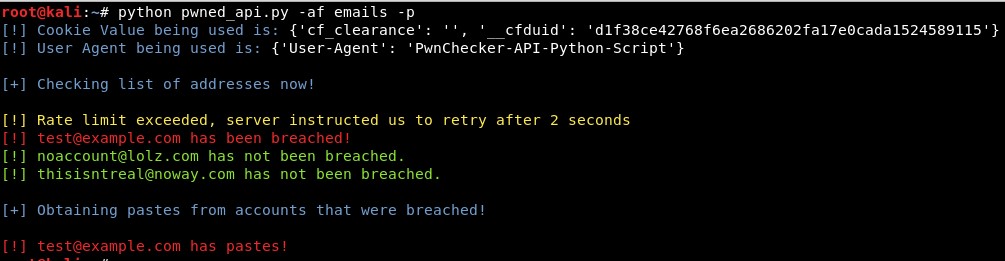

Output showing how /bin/cat can be abused to get the contents of /etc/shadow

- “autopwn” feature – This was created for one reason… to anger a certain Linux Wizard I know! If you know what you are doing, and have READ THE SCRIPT, then, by all means, make your life easier and just autopwn those Sudo rules.

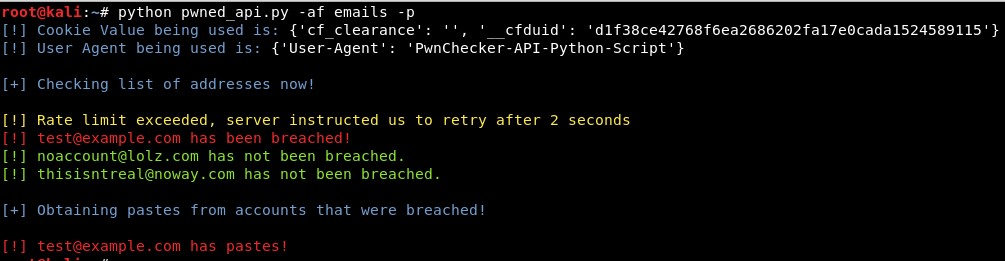

Showing the ALL/ALL being abused to get root

It is important to note that I have not added extended rule support (e.g. /bin/sed s/test/woot/g notarealfile). I highly suggest you use the information functionality, so you can adjust the pwnage to your needs, and always test the rules in a controlled environment.
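For readers who want to experiment with the idea behind the information feature, the first step is getting from a `sudo -l` listing to a list of (run as, command) pairs. The following is a minimal sketch, not the script’s actual code: `parse_sudo_listing`, `ENTRY_RE`, and the sample listing are hypothetical, and the parser assumes the usual indented “(run as) command” layout and splits naively on commas.

```python
import re
from typing import List, Tuple

# Assumed layout of an entry line: leading indent, "(run as)", optional
# tags such as "NOPASSWD:", then one or more comma-separated commands.
ENTRY_RE = re.compile(
    r"^\s+\((?P<run_as>[^)]*)\)\s*(?P<tags>(?:[A-Z_]+:\s*)*)(?P<commands>.+)$"
)


def parse_sudo_listing(text: str) -> List[Tuple[str, str]]:
    """Extract (run_as, command) pairs from text shaped like `sudo -l` output."""
    entries = []
    for line in text.splitlines():
        match = ENTRY_RE.match(line)
        if match is None:
            continue  # header lines and Defaults lines are skipped
        run_as = match.group("run_as").replace(" ", "")
        # Naive split: commands containing escaped commas are not handled.
        for command in match.group("commands").split(","):
            entries.append((run_as, command.strip()))
    return entries


# Hypothetical sample for illustration; not captured from a real host.
SAMPLE = """\
User paragonsec may run the following commands on host:
    (ALL : ALL) /bin/cat
    (root) NOPASSWD: /usr/bin/find, /usr/bin/rsync
"""

if __name__ == "__main__":
    for run_as, command in parse_sudo_listing(SAMPLE):
        print(run_as, command)
```

Parsing a saved listing rather than invoking sudo keeps the sketch safe to run anywhere; as noted above, rules with pinned arguments would still need to be examined by hand.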

Is this it? Absolutely not! I plan on taking others’ input and expertise to add more Sudo rules as they become available. Version two is currently being planned, and thus far I have the following as possible additions:

- Module support

- Addition of a learning function that shows and explains the exact issue with the Sudo rule

- Support for expanded rules

- Support for Sudo environment variables

- Creation of a VM that would allow hands-on pwnage of varying Sudo rules

How You Can Help

I envision this growing even further to help red teamers, blue teamers, and Linux admins alike. I recognize that I am not the leading expert on Linux by any means, and this is where the community comes in. If you care to help grow this project, please open an issue on GitHub and share your ideas!

by Quentin Rhoads-Herrera | Offensive Security Manager

May 21, 2018